Because the simplified equations in the guides are applied very generally, they have been formulated conservatively, so that the designs that result from their use do not fail.

There are a number of factors that are forcing a change in this approach to hvac design. Newer, cost-effective construction techniques mean that the building form is departing more frequently from the stacked rectangular box, beloved by engineers due to its simplicity and irreducibility. The data on which guidance notes are based is less applicable to these new building forms and the spaces within them. There is also a constant push from all sides for efficiency and refinement, and for leaner design.

But it is the room within design calculations that currently gives leniency for errors, and acts as a catch-all for design variables that are not known precisely. Reducing this buffer without trying to reduce the unknowns is a recipe for disaster. The definition of a successful hvac design is also changing. The linear, narrow rule of 'never over 24°C' is now repeatedly being broken, without complaint, by buildings that have been designed with a more intelligent, more thoughtful measure of success. The use of statistical yardsticks – 'temperature shall not rise above 25°C for more than 5% of occupied hours' – means that the guides must be replaced by techniques that are more refined, more focused and, inevitably, more complex.
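A statistical yardstick of this kind is straightforward to evaluate once hourly temperatures are available. The following is a minimal sketch, assuming a list of hourly temperatures and a matching list of occupancy flags; the function names and the sample data are hypothetical and purely illustrative.

```python
def exceedance_fraction(temps_c, occupied, threshold_c=25.0):
    """Fraction of occupied hours in which the temperature exceeds threshold_c."""
    occupied_temps = [t for t, occ in zip(temps_c, occupied) if occ]
    if not occupied_temps:
        return 0.0
    over = sum(1 for t in occupied_temps if t > threshold_c)
    return over / len(occupied_temps)


def meets_criterion(temps_c, occupied, threshold_c=25.0, max_fraction=0.05):
    """True if the threshold is exceeded in no more than max_fraction of occupied hours."""
    return exceedance_fraction(temps_c, occupied, threshold_c) <= max_fraction


# Illustrative data only: 20 occupied hours (one above 25 °C) and 4 unoccupied hours
temps = [22.0] * 19 + [26.5] + [28.0] * 4
occ = [True] * 20 + [False] * 4
print(exceedance_fraction(temps, occ), meets_criterion(temps, occ))
```

Unoccupied hours are ignored, so a hot night does not count against the design.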

The move towards engineering simulation tools is also being driven by the ongoing revision to UK Building Regulations and the environmental credentials to which some clients aspire. This requires design teams (including the quantity surveyor) to collaborate in a fashion previously seen only in very specific low energy design projects.

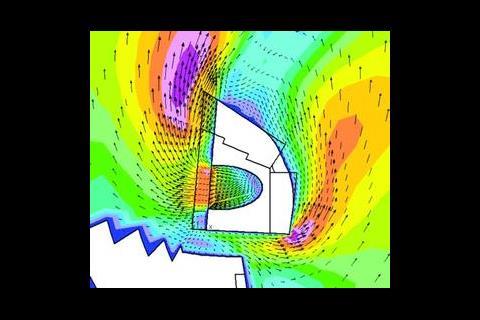

The resulting innovation and need for early assessment of design strategies (see figure 1) has helped drive a steady development of commercial software over the last 20 years. A further factor that will increase the demand for building performance simulation is the forthcoming implementation of the new EU Building Directive, which will enforce minimum energy standards and energy certification on most publicly used buildings over 1000 m² in the UK by 2005.

The zonal approach

Zonal modelling, the approach taken by such industry-standard packages as Tas and IES/Facet, is the natural first step on the road to complexity. The clear advantage of these methods is that they are transient, allowing the building to be simulated through time, in relatively little computer time, to gain an understanding of its year-round behaviour. Statistical data regarding the building's behaviour under a variety of climatic conditions flows from this. However, much is sacrificed to achieve this: every space, no matter what its shape or size, is reduced to a point, linked to other points with which it exchanges thermal energy, mass, momentum and other properties.
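The point assumption can be illustrated with a very small transient model. The sketch below is not how Tas or IES work internally; it is a hypothetical two-zone example in which each zone is a single temperature with a lumped thermal capacity, linked to outside and to its neighbour by assumed conductances, and stepped forward in time with a simple explicit Euler scheme. All names and numbers are illustrative assumptions.

```python
def step_zones(temps, t_out, capacities, ua_out, ua_link, gains, dt):
    """Advance zone temperatures by one explicit Euler step.

    temps       zone temperatures [°C]
    t_out       outdoor temperature [°C]
    capacities  thermal capacity of each zone [J/K]
    ua_out      conductance from each zone to outside [W/K]
    ua_link     dict {(i, j): conductance between zones i and j [W/K]}
    gains       internal heat gain in each zone [W]
    dt          time step [s]
    """
    # Net heat flow into each zone from internal gains and from outside
    flows = [g + ua * (t_out - t) for t, ua, g in zip(temps, ua_out, gains)]
    # Exchange between linked zones: what one zone gains, the other loses
    for (i, j), ua in ua_link.items():
        q = ua * (temps[j] - temps[i])
        flows[i] += q
        flows[j] -= q
    return [t + dt * q / c for t, q, c in zip(temps, flows, capacities)]


# Hypothetical case: two zones, only the first has an internal gain
temps = [20.0, 20.0]
for hour in range(24):
    temps = step_zones(temps, t_out=10.0, capacities=[5e6, 5e6],
                       ua_out=[100.0, 100.0], ua_link={(0, 1): 50.0},
                       gains=[1000.0, 0.0], dt=3600.0)
print(temps)
```

Because each zone is one number, a full year at hourly steps is only 8760 cheap updates per zone, which is why this class of model runs quickly. The same simplification is why nothing can be said about conditions within a zone.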

As a design tool, this rather gross-sounding assumption works well, for in a reasonable turnaround time, a comprehensive information dataset can be generated. Its salient points can easily be extracted, informing the design process and suggesting optimisation to the building design through any number of iterations. The behaviour of these points or zones can be influenced by building management control algorithms built into the software. A decent approximation of radiation effects can also be made.

Commercial zonal modelling software has now developed to the point where an integrated design approach can be supported within a single modelling environment. Most software packages now provide highly developed supplementary modules that can calculate annual solar regime, appropriate hvac sizing, daylighting and luminaire performance, detailed mass flow, building energy management systems/controls and Building Regulations compliance, and in some instances have a limited computational fluid dynamics (cfd) capability.

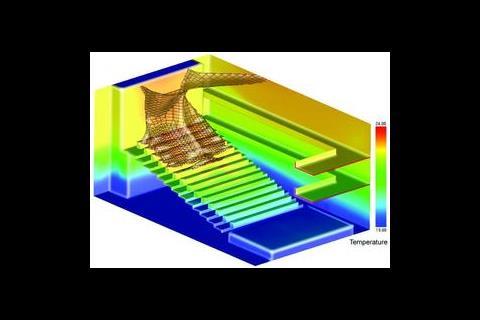

Of course, the point assumption for zonal models does not always hold. It cannot represent any spatial differences in conditions within a single space. Hence, the details of relatively large scale fluid flows (such as in atria, auditoria, around buildings) and small scale flows (macro-flow around diffusers, stratification) are lost entirely. To have any hope of representing these, cfd is required.

CFD and building services engineering

Computational fluid dynamics came into its own during World War II, with American scientists trying to predict how plutonium behaves under conditions of extreme temperature and pressure, ie in a bomb. The equations were too complex to solve directly, so a numerical method was developed instead and the problem solved by an early computer. The technology continues to be developed and applied in the aeronautical industry, with automotive coming a close second.

The uptake of cfd in the building industry has been slow, and for good reason. The development of a prototype aircraft or car body soaks up millions of pounds in research and development and occurs relatively infrequently. In our industry, just about every building is a prototype and significant design changes can occur at almost any time (the windows do not open as far as was hoped, for example), with margins always being pared to the minimum.

One great advantage of cfd is that it is capable of representing quite small scale features of a flow, such as a plume or flow from a diffuser. To do this, the 3D space that is to be modelled is divided into a large number of small contiguous volumes (like small bricks, tetrahedra, or others). While it is possible to run a 2D representation of a 3D space, very few hvac flows are strictly 2D – this can be a gross assumption leading to large errors. To build the grid, the most useful starting point is to use a 3D cad model representing the surfaces of the geometry of the space. Such models are more frequently being produced by architects for visualisation purposes during project competitions. A data 'clean-up' process is required to remove spurious and potentially problematic information.

Building the grid itself can be achieved manually, patching sections of space together in a relatively laborious process, or else a more automated choice may be available. There has been a trickle-down of automated grid generation software from the aerospace and aeronautical industries that actually works and comes at a competitive price. It is still the case, though, that generating the grid takes too much time, discouraging the iterative design process that is facilitated so well by simple zonal models.

Once the grid is generated, each point in the grid is assigned a value for air velocity, temperature, pressure, etc. These are initial estimates, and an iteration process begins, in which the guesses are updated according to the governing equations of mass, momentum and enthalpy conservation. Eventually, though not invariably, the size of the corrections diminishes to the point where they are insignificant, and the calculation is deemed to have converged. Unfortunately for us, flows driven by buoyancy, ie the stack effect, are problematic when it comes to achieving convergence.
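The guess, correct and check cycle can be shown on a much simpler problem than a real air flow. The sketch below is an assumption-laden stand-in, not a cfd solver: it solves steady heat conduction on a small 2D grid by Jacobi iteration, with one wall held hot and the others cold, and declares convergence when the largest correction in a sweep falls below a tolerance. The function name, grid size and temperatures are hypothetical.

```python
def solve_laplace(nx=20, ny=20, hot=30.0, cold=15.0, tol=1e-4, max_iter=10000):
    """Jacobi iteration for steady 2D conduction with fixed wall temperatures.

    Returns (field, iterations, converged).
    """
    # Initial estimate: everything at the cold temperature, left wall held hot
    field = [[cold] * nx for _ in range(ny)]
    for row in field:
        row[0] = hot

    for iteration in range(1, max_iter + 1):
        new = [row[:] for row in field]
        largest = 0.0
        for j in range(1, ny - 1):
            for i in range(1, nx - 1):
                # Each interior point becomes the average of its four neighbours
                new[j][i] = 0.25 * (field[j][i - 1] + field[j][i + 1]
                                    + field[j - 1][i] + field[j + 1][i])
                largest = max(largest, abs(new[j][i] - field[j][i]))
        field = new
        if largest < tol:  # corrections are now insignificant
            return field, iteration, True
    return field, max_iter, False  # iteration limit reached without converging


field, iterations, converged = solve_laplace(nx=10, ny=10)
print(iterations, converged)
```

This linear problem always converges. The coupled, non-linear equations of a buoyant flow offer no such guarantee, which is why the `max_iter` escape route matters in practice.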

As a result of variable density, a buoyancy term is introduced into the momentum equations that binds them to the energy (or enthalpy) equation. Small changes in one flow variable can greatly unsettle the others, with feedback loops often making convergence impossible. This unsteadiness is a feature of almost all buoyant flows. A lack of convergence does not always imply that the predictions are incorrect.
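One common way of writing this coupling, given here only as an illustration and assuming the Boussinesq approximation (density treated as constant except in the buoyancy term), is:

$$
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u} = -\frac{1}{\rho_0}\nabla p' + \nu\nabla^2\mathbf{u} - \beta\,(T - T_0)\,\mathbf{g}
$$

$$
\frac{\partial T}{\partial t} + \mathbf{u}\cdot\nabla T = \alpha\nabla^2 T
$$

Here $\mathbf{u}$ is the air velocity, $p'$ the departure of pressure from its hydrostatic value, $T$ the temperature, $\rho_0$ and $T_0$ a reference density and temperature, $\nu$ the kinematic viscosity, $\alpha$ the thermal diffusivity, $\beta$ the thermal expansion coefficient and $\mathbf{g}$ the gravitational acceleration vector. Temperature appears in the momentum equation through the last term, and velocity appears in the temperature equation through $\mathbf{u}\cdot\nabla T$, so neither can be solved without the other. A particular cfd code may use a different formulation.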

Turbulence models

Air flows in and around buildings are almost always turbulent rather than laminar. All cfd codes have turbulence models built in, which can be used to account for the effects of turbulence. They may be activated by the flick of a switch on the computer screen. This can be a problem, as there are often a great many turbulence models available, each tuned to provide good predictions for certain types of flows. Some turbulence models (the k-ε model, for example) are seen as general 'all-rounders', but even so their use in inappropriate situations can result in large errors. Choosing the most appropriate turbulence model is no easy task. At the very least, the user should have many examples of what kinds of models work well with certain types of flows – or else the quality of the results will be questionable.

Furthermore, many internal flows are unsteady, eg single sided natural ventilation is driven by turbulent gusts that individually drive small amounts of air into and out of openings on the facade. The eddy-viscosity type models mentioned above cannot represent such flows. The great hope in this area is large eddy simulation (LES) – essentially, the computer grid is much finer than before, so that much smaller features of the turbulent and now unsteady flow are represented directly, with a mathematical model now being used for only the smallest scales of turbulence. Such simulations are very expensive computationally, but they are the only way to represent some key features of turbulent flows. LES models are making their way into the industry – the technique is used by the US National Institute of Standards and Technology's fire engineering code, Fire Dynamics Simulator.

Don't believe the hype

The increase in computing speed and memory is allowing more sophisticated and complex tools to be used in hvac design. Advances in graphics can make engineering simulations look so realistic that their accuracy would seem to be beyond question. One commercial cfd code developer pointed out that whenever he ran a simulation, he would approach the initial results with extreme scepticism, and only accept them if they withstood a battery of probing and testing. It is not clear if our industry has the outlook or the time for that approach, but it is vital if standards are to be maintained.

The problem with increasing complexity is that the number of variables in a simulation quickly gets out of hand. The volume of information that results can overwhelm, to the point where it becomes more difficult to know whether the simulation has shown a design to be successful or, if not, what exactly you should change to fix it. With CIBSE guides, the world was a lot simpler. The choice between zone models and cfd is like taking a photo with a poor quality digital camera. You can choose to stand back and get a good picture of the whole scene – but look at your photo too closely and you find the resolution is not there. Instead you can choose to look at a particular feature in detail, but then the overall picture is lost.

A big question is, as building energy and performance simulation tools become more widely applied, who will lead this development? Historically, software developers have led with products slowly developed over time from initial research orientated programs. With more stringent Building Regulations coupled with building designs becoming ever more innovative, the demands on engineering software will inevitably increase. It is clear that developers, practitioners and regulators will need to collaborate ever more closely to design robust software tools that provide for margins of error while introducing the appropriate calculation variables required to understand more detailed building energy dynamics.

Source

Building Sustainable Design

Postscript

Ben Cartmell and Dr Shane Slater are associates of Whitby Bird Building Physics.