Demand for data centres continues to reflect the growing and changing needs of a connected world. Aecom’s Luke Kubicki (engineering lead), Amy Daniell (strategy director) and Giles Scott (development director) examine the latest trends in low-energy data centre design

01 / Introduction

Data centres are vital to modern life and underpin how we, in the developed world, work, play and interact. The number of internet users is forecast to grow by more than 17% year-on-year between 2015 and 2018, and the number of internet-enabled devices by 27% over the same period. This technology is used to access, store and process data, all of which passes through one or more data centres.

Data centres provide secure and resilient operating environments for IT equipment, without drawing undue attention to the sensitive nature of their contents. Although essentially industrial buildings, data centres are highly functional and design requirements are changing quickly. Furthermore, an ever-increasing need for energy efficiency and moves towards cloud-based environments add complexity to the market for data centres.

Total cost of ownership increasingly weighs on decisions to procure and build, which shifts project assessment towards operating costs. The need to future-proof developments calls for flexible and scalable design solutions. Risks can be mitigated by securing planning permission for the complete roll-out and then building out incrementally in response to changing demand.

As the data centre sector matures, its landscape has become clearer: end-users are better placed to choose between constructing their own data centre and engaging a third-party provider, whether through colocation, managed or cloud services. These decisions are underpinned by a better understanding of the total cost of ownership over a longer economic time horizon, typically 5-10 years. Institutions and funds are therefore able to invest with greater certainty, as unknown costs are minimised with the right level of management capability. However, this also means that data centres are becoming more commoditised. Competition is fierce as third-party and outsourcing organisations strive to lower costs and differentiate themselves.

02 / Market overview

Demand for data centres continues to be driven by mobile technology, media streaming, gaming and other activities we see as necessary to modern life. A recent change, however, is that these are often being delivered through a cloud platform. Cloud platforms tend to adopt less resilient facilities than the market norm. Though the growth in construction is significantly higher in developing markets (particularly East Asia), European prospects (including the UK) are good, with growth predicted at 12% compound annual growth rate (CAGR).

A more competitive landscape, financial constraints (associated with the recession) and a move to more scalable data centre deployment have resulted in a step-change in the size of speculative investment in the sector. Projects in the UK and Europe are much more likely to be built to match an immediate requirement than to reflect investors’ attempts to time the next period of rapid expansion in the sector. Consequently, the number of speculative developments has been low in recent years. There is also growth in less resilient data centres to accommodate cloud platforms.

EU regions continue to attract data centre investment as the use of IT continues to rise. More capital has been available and competition for deals has increased. But constraints on data centre growth stem from the availability of power from renewable sources and the ability to harness it for use by data centres. In less mature markets, fibre connectivity is also a key consideration. Data sovereignty is also becoming increasingly important as a new driver of location. Following the hacking and release of Sony data in late 2014, the market is also closely watching the outcome of the Microsoft data sovereignty ruling in the Republic of Ireland. These issues are likely to alter the demand characteristics of data centres in the coming years.

03 / Design

An increased focus on total cost of ownership has forced organisations and their designers to be cognisant of the cost impact of design decisions. Trade-offs must be made and decisions tailored for a given business, its needs and the IT infrastructure supporting it. This means that a data centre for a global cloud organisation will look very different from that of an investment bank. Local market knowledge also helps towards designing an efficient and balanced solution. Key design factors include:

- Location: historically, data centres often needed to be close to metropolitan areas because of slower connection speeds and issues with latency (the delay in data transfer over the network). These issues are now less of a concern, save for specialist requirements such as high-frequency trading. Data centres can therefore be sited in more remote locations, providing a number of cost benefits - often relating to land, power and labour. Data centres can also be located in areas with low ambient temperatures, allowing free cooling.

- Resilience: in the 1990s and early 2000s, high reliability was an essential requirement but often at great cost, both financially and with regards to efficiency - fully redundant systems in the financial services sector were a prime example. More recently, organisations only build in the necessary resilience to suit the systems and IT architecture they serve. Related to this is ensuring simplicity - human error during operation is a major source of downtime risk, so the use of simple solutions is a preferred design strategy.

- Extent of technical area: in other words, the areas known as “white space” or data halls.

- Power and heat load density: recent data centres range from 1,500 to 2,000W/m², determined by planned server density average loads. Additional high density provisions are accommodated for cabinets with cooling loads of up to 30kW.

- Scalability: combining the ability for sustained growth, maximising usage of plant capacity and minimising service interruption is critical. Most systems do not initially run at full capacity, so scaling the infrastructure defers capital expenditure, improving the business case, while improving efficiency.

- Flexibility: flexibility of layout and floor loadings stems from the building form, with facilities often housed in warehouses and industrial buildings. The need to refresh IT servers, often on a three-year cycle, introduces a design requirement that enables higher frequency equipment change. As the infrastructure tends to operate for 10 or even 20 years, consequent technology upgrades may occur up to 10 times during the data centre’s operational life.

- Energy efficiency: power usage effectiveness (PUE) is the ratio of total input power to the power used by the IT equipment. Eighty per cent of the infrastructure’s total lifecycle cost is due to operating costs. Primary design issues therefore need to balance capital expenditure with the selection of solutions and plant and equipment that take account of actual anticipated usage.

- Data centre monitoring, control and systems integration: there has been focus on DCIM (data centre infrastructure management) systems or integration platforms that collate information and manage processes associated with the data centre infrastructure and IT. Depending upon the level of integration, and the extent of the information that is being generated and managed, this can be a significant cost.

- Ancillary spaces: plant space, storage and IT assembly areas, administration accommodation and fallow (or swing) space aid the effective long-term operation of a facility. Although these are relatively low-impact cost drivers on their own, since the building itself typically accounts for no more than 20% of overall costs, adequate and well-planned space ensures that operating costs are minimised and balanced with overall capital costs.
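
As a rough illustration of the power and heat load density factor above, the sketch below converts a design density into a total IT load for a data hall and an indicative cabinet count. The function names, the 1,000m² hall and the 5kW average cabinet load are assumptions chosen for illustration; they are not figures from this article.

```python
def hall_it_load_kw(density_w_per_m2: float, technical_area_m2: float) -> float:
    """Total IT load of a data hall in kW, from design density and area."""
    return density_w_per_m2 * technical_area_m2 / 1000.0


def cabinets_supported(it_load_kw: float, avg_cabinet_kw: float) -> int:
    """Whole number of cabinets the load can serve at an average cabinet load."""
    if avg_cabinet_kw <= 0:
        raise ValueError("average cabinet load must be positive")
    return int(it_load_kw // avg_cabinet_kw)


# Hypothetical 1,000 m2 hall at both ends of the quoted density range.
low_kw = hall_it_load_kw(1500, 1000)    # 1500.0 kW
high_kw = hall_it_load_kw(2000, 1000)   # 2000.0 kW

# Assumed 5 kW average cabinet load (not a figure from the article).
print(cabinets_supported(low_kw, 5.0))   # 300
print(cabinets_supported(high_kw, 5.0))  # 400
```

The same hall therefore supports a third more cabinets at the top of the range, which is why density is fixed early in the design.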

04 / Resilience and availability

The industry-accepted definition of data centre availability is the Uptime Institute’s four-tier system. This describes the overall resilience of the building infrastructure only and does not account for the IT systems, nor does it indicate an overall probability of downtime. This lack of granularity has led many organisations to tailor their own standards for resilience aligned to their business and the likely failure modes of their systems. For example, an organisation may install redundant electrical distribution but only a single chilled water pipework loop, knowing that, with its chosen design approach, the electrical systems are more likely to fail than the mechanical systems.

Systems that are affected by redundancy / resilience requirements include:

- Physical separation: the minimisation of risks associated with the location of equipment and distribution paths. Fire separation may need to be included between redundant equipment, and power and cooling distribution may have to run in different fire zones.

- Power supplies: HV requirements can vary, with some facilities connecting to the primary grid for a more reliable supply; some may require two separate HV supplies, each rated to the maximum load. A fully diversified supply is a higher cost solution.

- Standby plant: a single generator “N” is usually adequate for Tier 1 data centres. Tier 2 facilities tend to need two generators, or N+1 depending on load/rating. The primary power source for Tier 3 and Tier 4 data centres is the generation plant, with mains power acting as an “economic” alternative power source. Higher-tier ratings demand redundant plant to meet concurrent maintenance and fault tolerance criteria.

- Central plant (chillers, UPS and generators): varying levels of back-up plant are used to offset the risk of component failure or to provide maintenance cover. Data centres designed to Tier 3 and below use an N+1 redundancy strategy, whereby one unit of plant is configured to provide overall standby capacity for the system. The cost of N+1 resilience is influenced by the degree of modularity in the design. For Tier 4 data centres, systems must be fault tolerant, which generally results in fully redundant plant and distribution.

- Distribution paths (pipework/ductwork and cabling/switchboards): power supplies and cooling may be required on a diverse basis, which avoids single points of failure while also providing capacity for maintenance and replacement. Cost impacts stem from the point at which diversity is provided. Tier 3 and 4 facilities require duplicate system paths feeding down to practical unit (PDU, CRAC unit or AHU) level.

- Final distribution: power supplies to the rack are the final links in the chain. Individual equipment racks may or may not have two power supplies.

- Water supply: this can be a key factor in system resilience, particularly where evaporation-based cooling solutions are used.
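
The statement above that the cost of N+1 resilience depends on the degree of modularity can be made concrete with a little arithmetic. The following is a minimal sketch, not a sizing method: the function names and the 2,000kW load are hypothetical, and "2N" is used here as shorthand for fully redundant plant, which is an assumption about how the fault-tolerant case would be met.

```python
import math


def units_required(load_kw: float, unit_kw: float) -> int:
    """N: the number of units needed to carry the load with no spare."""
    return math.ceil(load_kw / unit_kw)


def installed_units(load_kw: float, unit_kw: float, scheme: str) -> int:
    """Installed unit count for 'N', 'N+1' or '2N' redundancy."""
    n = units_required(load_kw, unit_kw)
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1
    if scheme == "2N":
        return 2 * n
    raise ValueError(f"unknown scheme: {scheme}")


def overprovision(load_kw: float, unit_kw: float, scheme: str) -> float:
    """Installed capacity divided by the load it serves."""
    return installed_units(load_kw, unit_kw, scheme) * unit_kw / load_kw


# Hypothetical 2,000 kW load served by large or small modules.
print(overprovision(2000, 1000, "N+1"))  # 1.5  (3 x 1,000 kW units)
print(overprovision(2000, 500, "N+1"))   # 1.25 (5 x 500 kW units)
print(overprovision(2000, 1000, "2N"))   # 2.0  (4 x 1,000 kW units)
```

With smaller modules the single standby unit is a smaller share of the installed capacity, so the premium for N+1 falls, whereas a fully redundant arrangement doubles capacity whatever the module size.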

User requirements and continuous, real-time IT processing capabilities are increasing all the time. Cloud and internet organisations manage resilience at both the IT and infrastructure levels; they mirror data across multiple sites and handle resilience within the IT platform. Consequently, they have multiple facilities with lower resilience, reducing cost and often providing an overall greater level of resilience.

05 / Sustainability

Data centres account for approximately 2% of total global energy use. Performance, security and location were once the headline requirements for IT client organisations. Now, though, a multi-dimensional decision process needs to allow for energy reduction and sustainability, amongst other things. Broader environmental concerns - such as reducing carbon footprint or corporate requirements to achieve sustainable operations - are increasingly seeing new data centres registering for BREEAM or LEED environmental assessments. Third-party providers of data centres also understand the advantages of similar concepts in their service offer. The opportunity for energy reduction exists to a far greater degree than in other building types because of the significantly higher demand for and use of energy in data centres in the first place.

Infrastructure:

Infrastructure efficiency is an area of increasing focus for client organisations and design teams. A key metric for this has been PUE, which is the total data centre power requirement divided by server consumption. The Uptime Institute Annual Survey reports that the average operational PUE is 1.7. In the UK, new data centres will typically target a PUE of between 1.2 and 1.5, with the most efficient designs aiming for 1.15 or lower.
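
To show what these PUE figures mean in practice, the sketch below applies the definition given above (total data centre power divided by IT power) to a hypothetical 1,000kW IT load. The function names and the load are assumptions for illustration; only the PUE values of 1.7 and 1.2 come from the text.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power over IT power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Power drawn by everything other than IT (cooling, losses, lighting)."""
    return it_kw * pue_value - it_kw


IT_LOAD_KW = 1000.0  # hypothetical IT load, not from the article

for target in (1.7, 1.2):
    extra = overhead_kw(IT_LOAD_KW, target)
    print(f"PUE {target}: about {extra:.0f} kW of non-IT load")
```

On these assumptions, moving from the reported average of 1.7 to a target of 1.2 cuts the non-IT load from roughly 700kW to roughly 200kW for the same IT output.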

Approaches to achieving energy savings in data centres include:

- Appropriate definitions of fault tolerance and availability: energy savings can be achieved via architectures that tolerate lower security levels.

- Management of airflow in the computer room: limiting the mixing of hot and cold air in data centres is achieved using appropriate containment strategies. Significant efficiencies can be gained when containment is combined with orienting equipment and racks so that they draw cooling air from a “cold aisle” and expel hot air into a “hot aisle”.

- Challenging internal conditions: historically data centres have been required to operate at fixed temperatures, typically about 22°C ±1°C and 50% relative humidity ±10%. Standards introduced by the American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE) allow for the data centre to operate within a much wider envelope of 18-27°C under normal conditions. The standards also allow for operation at temperatures above those recommended for short periods, which can improve the efficiency of the cooling solutions. As equipment manufacturers warrant their products for higher and higher temperatures, this trend will continue - tempered only by the requirement for safe operating conditions for personnel in data centres which are already upwards of 35°C (and can be as high as 45°C) in the hot aisles.

- Direct and indirect fresh air cooling: increasingly, systems are being installed that allow the heat from the data hall to be removed directly using air only, without the use of a cooling water circuit. In more temperate climates, this can be achieved without the use of refrigerant-based cooling. So while this option is often more expensive, due to air distribution requirements, significant savings are made on the electrical side (due to the lack of high compressor loads) resulting in a net saving.

- Adiabatic cooling: can improve the efficiency of the heat rejection process but as water-use attains higher global scrutiny, technology that utilises water evaporation will be looked on less favourably.

- Free cooling chillers: using ambient air temperatures to cool equipment is a very low-energy solution that avoids the need to operate refrigerant cooling.

- Variable speed motors: these enable chillers, air handling plant and pumps to have their outputs modulated to suit the actual cooling requirements. Energy savings are achieved through part-load operation of the data hall and also offsetting plant over-provision associated with redundant units.

- High-efficiency uninterruptible power supplies (UPS): significant energy losses stem from the necessary provision of UPS to the data load and air-conditioning equipment. UPS efficiency can drop significantly at low loads, which frequently occur because multiple systems must run to maintain the relevant tier rating. Considered selection of high-efficiency or ECO-mode UPS equipment can mitigate these inefficiencies.
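
The low UPS loading described in the last item above follows directly from running duplicate systems. The sketch below is illustrative only: it assumes the IT load is shared equally between identical UPS systems, and the function name, the 1,000kW rating and the 40% hall fill are hypothetical values rather than figures from this article.

```python
def ups_load_fraction(it_load_kw: float, system_rating_kw: float,
                      systems_sharing: int) -> float:
    """Fraction of its rating at which each UPS system runs.

    Assumes the load is split equally between identical systems.
    """
    if system_rating_kw <= 0 or systems_sharing < 1:
        raise ValueError("rating must be positive and at least one system required")
    return it_load_kw / (system_rating_kw * systems_sharing)


# Two systems (A and B), each rated for the full 1,000 kW design load.
full_hall = ups_load_fraction(1000.0, 1000.0, 2)   # each system at 50%
part_hall = ups_load_fraction(400.0, 1000.0, 2)    # hall 40% full: each at 20%

print(f"{full_hall:.0%} at full design load, {part_hall:.0%} when 40% occupied")
```

Even a fully occupied hall leaves each system at half load in this arrangement, so the efficiency of the equipment at part load matters more than its headline full-load figure.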

IT Systems:

As marginal improvements in infrastructure efficiency decrease, optimisation of server performance is becoming more important. This is concerned with getting maximum processing power per unit of investment in servers and running costs. Cloud services and virtualisation of server architecture (so that processors are shared) are improving utilisation and operational efficiency. However, more must be done to turn off servers that aren’t being used and to use server standby functionality. Improving this is a key focus for optimising data centre performance in the future.

06 / Upgrades and improvements

As a large number of data centres near end of life, they suffer an increased risk of failure, reduced efficiency and often don’t represent best value to the business they serve. However, they can represent an excellent opportunity. A recent white paper by AECOM discusses the issues and solutions to this problem, and reports that an upgrade or refurbishment of the existing facility can cost significantly less than a new-build and can be achieved in a shorter period since there is no need for planning approvals or additional utility connections.

07 / Cost breakdown

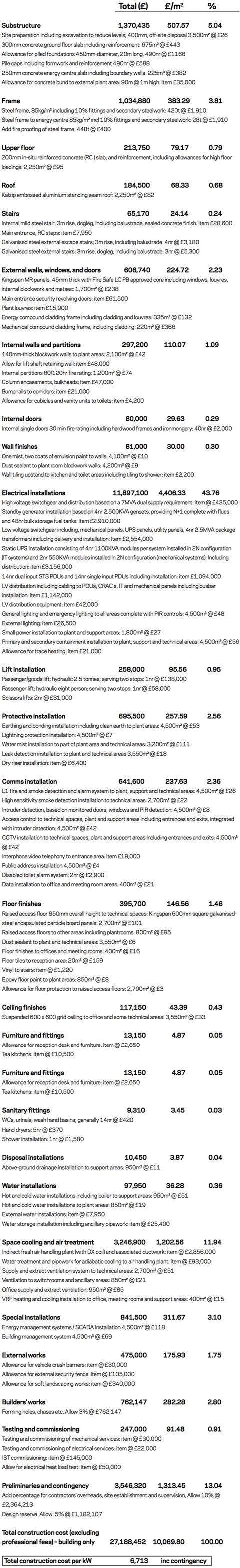

This cost model is based on a Tier 3 two-storey, new-build data centre for loads up to 1,500W/m². The scheme comprises two technical spaces, each with a gross internal area of 1,350m², 850m² of associated internal plant areas, 600m² of external plant deck and 950m² of support facilities. Electrical infrastructure to the site is via A and B supplies with on-site dedicated substations. Further resilience is provided by uninterruptible power supplies and diesel generators at N+1. The technical areas are completed including full fit-out and are capable of delivering up to 20kW per rack.

The m² rate in the cost breakdown is based on the technical area, not gross floor area.

The costs of site preparation, external works and external services are included.

Professional and statutory fees and VAT are not included.

Costs are given at first quarter 2015 price levels, in the UK. The pricing level assumes competitive procurement based on a lump-sum tender. Adjustments should be made to account for differences in specification, programme and procurement route.
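
Because the rate is quoted on the technical area rather than gross floor area, it will look high when set against rates for other building types. The sketch below shows the effect using the areas given in the scheme description. The total cost is a hypothetical placeholder, not a figure from this cost model, and gross floor area is assumed here to be the sum of the internal areas, excluding the external plant deck.

```python
# Areas from the scheme description above (m2).
TECHNICAL_AREA_M2 = 2 * 1350   # two technical spaces
INTERNAL_PLANT_M2 = 850
SUPPORT_M2 = 950

# Assumption: gross floor area = internal areas only, so the 600 m2
# external plant deck is left out.
GROSS_AREA_M2 = TECHNICAL_AREA_M2 + INTERNAL_PLANT_M2 + SUPPORT_M2


def rate_per_m2(total_cost: float, area_m2: float) -> float:
    """Cost per square metre for a chosen measurement basis."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return total_cost / area_m2


HYPOTHETICAL_TOTAL_COST = 45_000_000  # placeholder value for illustration only

rate_on_technical = rate_per_m2(HYPOTHETICAL_TOTAL_COST, TECHNICAL_AREA_M2)
rate_on_gross = rate_per_m2(HYPOTHETICAL_TOTAL_COST, GROSS_AREA_M2)

print(f"technical area basis: {rate_on_technical:,.0f} per m2")
print(f"gross area basis:     {rate_on_gross:,.0f} per m2")
```

On these assumptions the technical area is 2,700m² against 4,500m² gross, so the same total cost gives a rate about two-thirds higher when expressed on the technical area.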

07 / Cost model
